Ichizoku is on a mission to help Japanese companies adopt AI more quickly and with greater success. That is why we partnered with Arize AI, the leader in LLM and ML observability. In this post, Cam Young, an ML Sales Engineer at Arize, explains why embracing these technologies is more than a trend: it is a strategic imperative as we head into 2024.

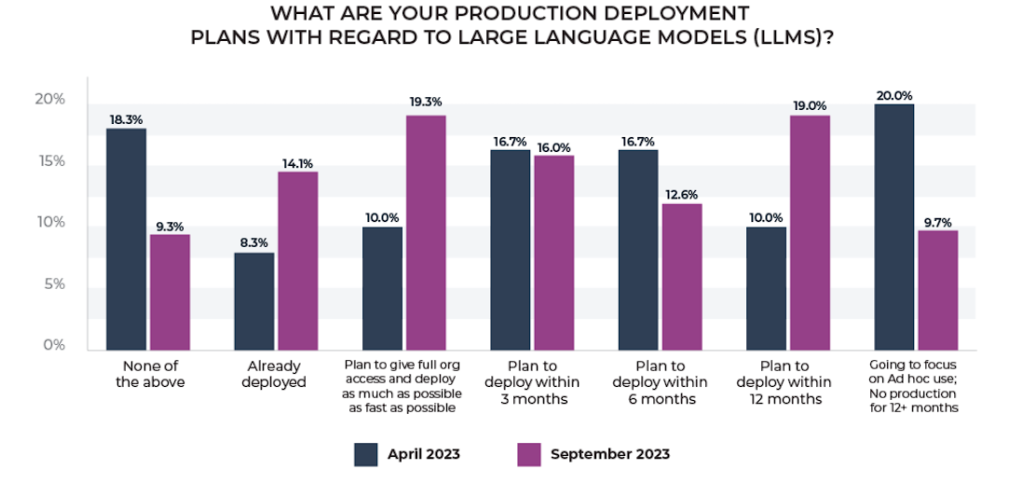

With a staggering 61.7% of enterprise engineering teams already running or planning to deploy LLM applications, the urgency to act is clear. But deployment is not without its challenges, so Cam also reviews three keys to deploying successfully. Enjoy the article.

Jay Revels

CEO of Ichizoku

Cam Young, ML Sales Engineer at Arize

From better customer service to more rapid drug discovery, generative AI is quickly reshaping industries. According to a recent survey, 61.7% of enterprise engineering teams now have, or plan to have within a year, a large language model application deployed in the real world. Over one in ten (14.7%) are already in production, compared to 8.3% in April.

Given the rapid rate of adoption, some early growing pains are inevitable. Among early adopters of LLMs, nearly half (43%) cite issues like evaluation, hallucinations, and needless abstraction as implementation challenges. How can large enterprises overcome these challenges to deliver results and minimize organizational risk?

Here are three keys that enterprises successfully deploying LLMs are embracing to rise to the challenge.

Taking an Agnostic Approach to a Changing Landscape

An engineering team that spends a month building a piece of infrastructure that connects to only one foundation model (e.g., OpenAI's GPT-4) or one orchestration framework (e.g., LangChain) may quickly find its work, or even its entire business strategy, rendered obsolete. Keeping a company's LLM stack, including its observability tooling, agnostic so that it connects easily to the major foundation models and tools minimizes switching costs and friction.
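To make the idea concrete, here is a minimal Python sketch of a provider-agnostic layer. It assumes a hypothetical `ChatModel` interface with a single `complete` method; `EchoModelA`, `EchoModelB`, `PROVIDERS`, `get_model`, and `summarize_ticket` are illustrative names only, and the adapters return canned strings instead of calling any real vendor SDK.

```python
from typing import Callable, Protocol


class ChatModel(Protocol):
    """Hypothetical provider-neutral interface that application code depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoModelA:
    """Stand-in adapter for one vendor; a real adapter would wrap that vendor's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[model-a] {prompt}"


class EchoModelB:
    """Stand-in adapter for a second vendor."""

    def complete(self, prompt: str) -> str:
        return f"[model-b] {prompt}"


# Hypothetical registry: the provider is chosen by name (e.g. from config), not hard-coded.
PROVIDERS: dict[str, Callable[[], ChatModel]] = {
    "model-a": EchoModelA,
    "model-b": EchoModelB,
}


def get_model(name: str) -> ChatModel:
    """Build the adapter registered under `name`, or fail with a clear error."""
    try:
        return PROVIDERS[name]()
    except KeyError:
        raise ValueError(f"unknown provider: {name!r}") from None


def summarize_ticket(model: ChatModel, ticket: str) -> str:
    # Business logic sees only the ChatModel interface, never a specific vendor.
    return model.complete(f"Summarize this support ticket: {ticket}")


if __name__ == "__main__":
    # Switching providers is a configuration change, not a code rewrite.
    for provider in ("model-a", "model-b"):
        print(summarize_ticket(get_model(provider), "Login fails after password reset"))
```

Because application code only sees `ChatModel`, moving to a different foundation model means writing one new adapter and changing a configuration value, rather than reworking everything built on top of it.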

Operationalizing LLM Science Experiments

In a space where foundation model providers offer their own evals (effectively grading their own homework), it is important to develop or leverage independent LLM evaluation. Arize's exclusive focus on observability means the company's LLM evals and other tools can be trusted to assess LLMs objectively. That objectivity, coupled with a team of data scientists and machine learning platform engineers, can give organizations a solid foundation for rapidly automating and operationalizing hundreds of scientific experiments for LLM use cases, ensuring reliability in production and responsible use of AI across the enterprise.

Quantifying ROI and Productivity Gains

Implementing generative AI can be difficult and time-intensive given the complexity and novelty of the models. It is important to have systems in place that detect LLM app performance issues affecting revenue, along with workflows that proactively and automatically surface the root cause. Here, open source and other tools can help minimize disruption through interactive and guided workflows such as UMAP embedding visualizations, spans and traces, prompt playgrounds, and more.

Conclusion

As the generative AI field continues to evolve, it can be difficult to balance the obligation to deploy LLM apps reliably and responsibly with the need for speed created by the unique competitive pressures of the moment. Hopefully these three keys can help leaders navigate the large language model operations (LLMOps) landscape as we head into a new year, and a new era!